Stories involving such tasks are almost impossible to plan for effectively. Without knowing at least roughly what needs to be done, nobody can accurately predict how long it will take to implement. Such vague stories often introduce a lot of variability into the delivery process, and the short-term statistical analysis that you would use for regular work won’t really apply to planning such stories.

In iterative processes where teams commit at the start of an iteration to deliver stories, such vague stories can lead to nasty surprises towards the end.

Some teams solve this by writing fake user stories that mostly follow the pattern ‘As a developer, I want to understand how the new external API works’. These are rarely expressed in a way that would make them appear valuable from the perspective of business stakeholders, and it’s almost impossible to define any kind of acceptance criteria for them.

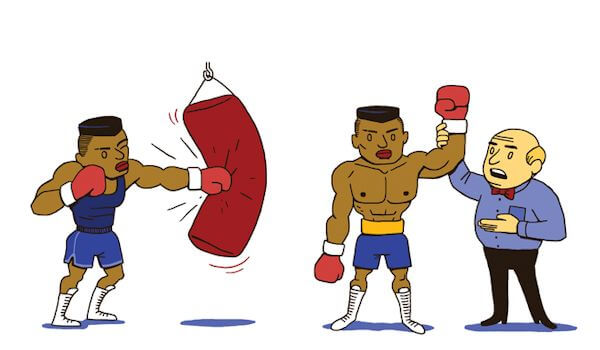

A good way to deal with such situations is to explicitly split the research tasks into a separate story with a goal of its own. A helpful way of thinking about this is that a story should be either about learning or earning.

- Learning stories help stakeholders plan better.

- Earning stories help to deliver value to end-users.

The big difference between a learning story and a research task is that the story has an explicit goal. The goal should be valuable to a stakeholder, providing enough information for them to make a planning decision. The acceptance criteria for learning stories are easy to specify: work with stakeholders to identify what kind of information they would need in order to approve or reject the work. Then decide how much time the stakeholders want to invest in getting that information, effectively time-boxing the learning story.

Key benefits

Planning for time-boxed learning stories avoids research turning into vague, uncontrolled work that introduces variability. It also removes the need for research outside the regular delivery cycle, preventing long upfront analysis.

Having explicit learning items helps to manage short-term capacity and prevents overloading a team with other stories. This is particularly important for teams who track velocity with numerical story points.

When the learning and earning aspects of stories are split, teams don’t have to pretend to estimate learning, or to create user stories with no apparent value. At the same time, this idea opens up the possibility that the learning ends without a clear conclusion, or even with a decision that implementation would be impractical. This prevents nasty surprises for stakeholders, because a team commits only to the learning story instead of committing to deliver something vague.

How to make it work

Create a clear time budget for learning stories; how big it should be depends on the importance of the information you’re trying to get. This helps you to balance learning stories against earning stories. It will prevent learning-only iterations where nothing useful gets built, and stop the team from spending too much time solving difficult problems that may not need to be solved at all. If the learning story ends without a clear solution, the stakeholders can decide whether they want to invest more in learning or try an alternative solution.

Get the team to decide on the output of a learning story before starting. What kind of information do stakeholders need for future planning? How much detail and precision do you need to provide? Will a quick-and-dirty prototype be needed to validate a solution with external users, or is a list of key risks and recommendations enough? This ensures that everyone is on the same page and helps you decide who needs to be involved in the research.

Ideally, learning stories should be tackled by cross-functional delivery teams who will later implement the conclusions, not by separate groups. This avoids the need to create documentation, hand work over or transfer knowledge.

Finally, in Practices for Scaling Lean and Agile Development, Larman and Vodde warn against treating normal solution design as fake research work, especially if it leads to solution design documentation for other people to implement. They suggest that research tasks should be reserved for ‘study far outside the familiar’.

Gojko Adzic

Author of groundbreaking tools such as "Specification by Example" and "Impact Mapping", which revolutionized the approach to testing and work planning. A recognized trainer and consultant, Gojko specializes in the practical application of Agile and Lean methods, helping software development teams achieve better results.